Well, well, well... Yep, you read that correctly: hijacking detectportal.firefox.com. But why would one want to do this?

Let's first summarize the idea behind this FQDN:

- By default, FF initiates connections to this FQDN on a regular basis.

- FF attempts to detect the presence of a captive portal by downloading the file success.txt from http://detectportal.firefox.com/success.txt

- That file consists of one word only: "success".

- If FF can successfully retrieve the file, it assumes that it is not constrained by a captive portal.

- If the reply is a redirect, FF knows that there is a captive portal and will pop up a Network Login Needed warning.
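The decision described in the list above can be sketched in a few lines of Python. This is only an illustration of the logic as summarized here, not Firefox's actual implementation; the function name `classify_probe` and the "unknown" fallback are assumptions made for the example.

```python
def classify_probe(status: int, body: bytes, expected: bytes = b"success") -> str:
    """Classify one probe of success.txt following the rules listed above.

    Hypothetical helper: Firefox's real check may differ in its details.
    """
    # A redirect means something in the path wants us to log in first.
    if 300 <= status < 400:
        return "captive-portal"
    # A 200 carrying the expected word means the path is unobstructed.
    if status == 200 and body.strip() == expected:
        return "open"
    # Anything else (errors, rewritten pages) is left undecided here.
    return "unknown"
```

A rewritten page served with a 200 status lands in "unknown" in this sketch, since the list above only covers the clean success and the redirect cases.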

Below is an example of the "Network Login" invitation FF shows once it has detected a captive portal.

Now that we know a little more about this behavior, let me list the things I don't really like about it:

- Such traffic is beaconing constantly.

- I'm not a fan of such traffic leaving my premises.

- Plain HTTP traffic is prone to manipulation.

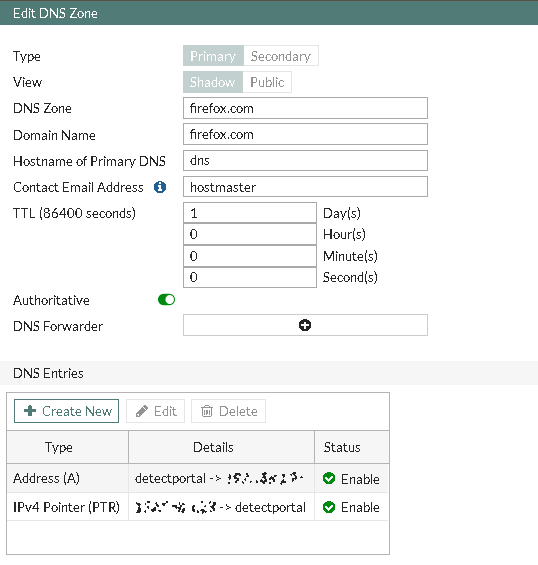

Hence, let me show you what I've done to circumvent this behavior while emulating the exact same feature from a locally hosted server. The first thing we need is to redirect the targeted FQDN to something we control, here through FortiOS:

As displayed above, I've created a FortiGate-controlled DNS zone named "firefox.com", hosting the domain name "firefox.com" with a single DNS entry: detectportal. That entry points to an IP address under our control (a local one, sitting behind any captive-portal-enabled policies etc.; for me, in a DMZ), which runs a very basic nginx web server config on TCP:80.
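To check from a client that the DNS override is really in effect, something like the following Python sketch can help. It assumes the override points at a private (RFC 1918 style) address, as in my DMZ case; the helper names `resolve_ipv4` and `is_local_override` are made up for this example.

```python
import ipaddress
import socket


def resolve_ipv4(hostname: str) -> list:
    """Return the sorted IPv4 addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(
        hostname, 80, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    return sorted({info[4][0] for info in infos})


def is_local_override(address: str) -> bool:
    """True when the address is private, i.e. the answer came from our own zone.

    Assumption: the hijacked entry lives on a private network.
    """
    return ipaddress.ip_address(address).is_private
```

Calling `resolve_ipv4("detectportal.firefox.com")` on a client behind the FortiGate and passing each result to `is_local_override` should come back true once the zone is active, and false when the client still resolves the public address.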

For this hack, I've hosted the web server in the Web Station app on a Synology device. Below you'll find the configuration files involved. Here is the main webstation-vhost.conf:

server {

listen 80;

listen [::]:80;

server_name detectportal.firefox.com;

root "/www/detectportal";

index index.html index.htm index.cgi index.php index.php5 ;

error_page 400 401 402 403 404 405 406 407 408 500 501 502 503 504 505 @error_page;

location @error_page {

root /var/packages/WebStation/target/error_page;

rewrite ^ /$status.html break;

}

location ^~ /_webstation_/ {

alias /var/packages/WebStation/target/error_page/;

}

include /usr/local/etc/nginx/conf.d/detecportal/user.conf*;

}

And here are the contents of user.conf (included from webstation-vhost.conf):

location / {

default_type application/octet-stream;

add_header Cache-Control "public, must-revalidate, max-age=0, s-maxage=86400";

return 200 'success\n';

}

And finally, the needed success.txt file sitting in the web root:

robin@mommy:/www/detectportal$ echo -n success > success.txt

robin@mommy:/www/detectportal$ cat success.txt

successrobin@mommy:/www/detectportal$

This file needs to contain only the word success.
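If you'd like to try the idea before touching nginx, here is a rough Python stand-in for what user.conf does: answer every GET with a 200, the 8-byte body and the same Cache-Control header. It is not what I run on the Synology, only a sketch for experimenting; the names `SuccessHandler`, `serve_in_background` and `probe` are invented for the example.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Values copied from user.conf above.
BODY = b"success\n"
CACHE_CONTROL = "public, must-revalidate, max-age=0, s-maxage=86400"


class SuccessHandler(BaseHTTPRequestHandler):
    """Answer every GET with 200 and the 8-byte body from user.conf."""

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "application/octet-stream")
        self.send_header("Content-Length", str(len(BODY)))
        self.send_header("Cache-Control", CACHE_CONTROL)
        self.end_headers()
        self.wfile.write(BODY)

    def log_message(self, format, *args):
        pass  # Silence per-request logging.


def serve_in_background(host="127.0.0.1", port=0):
    """Start the stand-in server on a daemon thread; port 0 picks a free port."""
    server = ThreadingHTTPServer((host, port), SuccessHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def probe(host, port, path="/success.txt"):
    """Fetch path and return (status, Content-Length header, body)."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Content-Length"), resp.read()
    finally:
        conn.close()
```

The sketch binds to 127.0.0.1 on a random port, so it is only good for local testing; serving real clients would mean binding port 80 on the address the DNS entry points to.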

Now let's review a standard "detectportal.firefox.com" curl request:

λ curl -v http://detectportal.firefox.com

* Rebuilt URL to: http://detectportal.firefox.com/

* Trying 34.107.221.82...

* TCP_NODELAY set

* Connected to detectportal.firefox.com (34.107.221.82) port 80 (#0)

> GET / HTTP/1.1

> Host: detectportal.firefox.com

> User-Agent: curl/7.55.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx

< Date: Tue, 08 Dec 2020 07:47:34 GMT

< Content-Type: application/octet-stream

< Content-Length: 8

< Via: 1.1 google

< Age: 56780

< Cache-Control: public, must-revalidate, max-age=0, s-maxage=86400

<

success

* Connection #0 to host detectportal.firefox.com left intact

λ curl -v http://detectportal.firefox.com/success.txt

* Trying 34.107.221.82...

* TCP_NODELAY set

* Connected to detectportal.firefox.com (34.107.221.82) port 80 (#0)

> GET /success.txt HTTP/1.1

> Host: detectportal.firefox.com

> User-Agent: curl/7.55.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx

< Date: Tue, 08 Dec 2020 07:47:42 GMT

< Content-Type: text/plain

< Content-Length: 8

< Via: 1.1 google

< Age: 49627

< Cache-Control: public, must-revalidate, max-age=0, s-maxage=86400

<

success

* Connection #0 to host detectportal.firefox.com left intact

And here is a hijacked "detectportal.firefox.com" request:

curl -v http://detectportal.firefox.com

* Rebuilt URL to: http://detectportal.firefox.com/

* Trying 10.23.23.23...

* TCP_NODELAY set

* Connected to detectportal.firefox.com (10.23.23.23) port 80 (#0)

> GET / HTTP/1.1

> Host: detectportal.firefox.com

> User-Agent: curl/7.55.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx

< Date: Tue, 08 Dec 2020 07:48:20 GMT

< Content-Type: application/octet-stream

< Content-Length: 8

< Connection: keep-alive

< Keep-Alive: timeout=20

< Cache-Control: public, must-revalidate, max-age=0, s-maxage=86400

<

success

* Connection #0 to host detectportal.firefox.com left intact

curl -v http://detectportal.firefox.com/success.txt

* Trying 10.23.23.23...

* TCP_NODELAY set

* Connected to detectportal.firefox.com (10.23.23.23) port 80 (#0)

> GET /success.txt HTTP/1.1

> Host: detectportal.firefox.com

> User-Agent: curl/7.55.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx

< Date: Tue, 08 Dec 2020 07:48:31 GMT

< Content-Type: text/plain

< Content-Length: 8

< Connection: keep-alive

< Keep-Alive: timeout=20

< Cache-Control: public, must-revalidate, max-age=0, s-maxage=86400

<

success

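* Connection #0 to host detectportal.firefox.com left intact

As we can see, the results are the same where it matters (status, Content-Type, Content-Length, Cache-Control and body); only the upstream Via and Age headers are replaced by local keep-alive headers. We're just trusting our own environment a tiny notch more than anything beyond =)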
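For those who would rather not eyeball curl output, the comparison can be sketched in Python. The set of ignored headers and the helper names are my own choices for this example; the idea is simply to drop the headers that legitimately differ between the upstream and the local server and compare the rest.

```python
# Headers expected to differ between the upstream CDN and a local nginx.
IGNORED_HEADERS = {"date", "age", "via", "connection", "keep-alive"}


def comparable(status, headers, body):
    """Reduce a response to the parts that should match on both servers."""
    kept = {
        name.lower(): value
        for name, value in headers.items()
        if name.lower() not in IGNORED_HEADERS
    }
    return status, kept, body


def same_probe_result(upstream, local):
    """Compare two (status, headers, body) tuples, ignoring volatile headers."""
    return comparable(*upstream) == comparable(*local)
```

Fed with the two /success.txt responses shown above (taking the body to be "success" plus a newline, which is what Content-Length: 8 suggests), `same_probe_result` returns True.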

If you don't want FF's captive portal checks to occur at all, you can turn them off: type about:config in the FF address bar, then type "captive" in the search field. You should see a list of entries, one of which is network.captive-portal-service.enabled. Its default value is "true"; set it to "false" to disable the checks.
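The same preference can also be set from a user.js file in the Firefox profile directory, which is handy when several profiles need the change. The Python sketch below appends the pref line if it is not already there; the function name and the way you locate the profile directory are assumptions left to you.

```python
from pathlib import Path

# user.js syntax for the preference named above.
PREF_LINE = 'user_pref("network.captive-portal-service.enabled", false);\n'


def disable_captive_portal_check(profile_dir):
    """Append the pref line to <profile_dir>/user.js unless already present."""
    user_js = Path(profile_dir) / "user.js"
    existing = user_js.read_text(encoding="utf-8") if user_js.exists() else ""
    if PREF_LINE.strip() not in existing:
        # Keep any existing content and make sure it ends with a newline.
        if existing and not existing.endswith("\n"):
            existing += "\n"
        user_js.write_text(existing + PREF_LINE, encoding="utf-8")
    return user_js
```

Firefox reads user.js at startup, so the change applies after the next restart of that profile.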

I hope you've found this useful.

Cheers,

Obuno

Image credits: Captive State (2019) - Directed By Rupert Wyatt